In recent years, models such as Gemini and GPT-5 have dramatically improved the accuracy of AI translation. However, when you try to integrate them into your own services or business systems, serious operational challenges arise that go beyond translation accuracy itself.

1. Limitations Imposed by the Fundamental Requirement of API Integration

When you automate translation or build it into a system, you cannot simply copy and paste text into a chat UI by hand. In practice, you will need to call the AI through an API (Application Programming Interface).

However, each API request is handled independently: the model returns one response per request and does not "remember previous translations" the way a human translator would. This stateless nature (no state is retained between calls) leads directly to the problem of consistency described below.

2. "Tone" and "Terminology" That Shift with Each API Call

AI models (especially LLMs) tend to produce slightly different outputs for the same sentence from one call to the next, depending on parameters such as temperature and on how the context happens to be interpreted at that moment. Typical symptoms include:

- Polite and plain forms mixed within the same document

- The same technical term translated as A in one place and as B in another
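Terminology drift of this kind can be detected automatically after translation. The following is a minimal sketch, assuming an English-to-Japanese pair and a hypothetical approved glossary; the function name and glossary contents are illustrative, not part of any specific product. It flags each segment whose source contains a glossary term but whose translation does not use the approved target term:

```python
import re

# Hypothetical approved glossary: English source term -> required Japanese term.
GLOSSARY = {
    "repository": "リポジトリ",
    "deploy": "デプロイ",
}


def find_term_violations(pairs, glossary):
    """Return (segment_index, source_term, expected_target) for each segment whose
    source contains a glossary term but whose translation lacks the approved term."""
    violations = []
    for i, (source, translation) in enumerate(pairs):
        for term, target in glossary.items():
            # Whole-word, case-insensitive match on the English side.
            if re.search(rf"\b{re.escape(term)}\b", source, re.IGNORECASE):
                if target not in translation:
                    violations.append((i, term, target))
    return violations


pairs = [
    ("Clone the repository.", "リポジトリをクローンします。"),
    ("Push to the repository.", "レポジトリにプッシュします。"),  # drifted variant
]
print(find_term_violations(pairs, GLOSSARY))  # [(1, 'repository', 'リポジトリ')]
```

A whole-word match like this misses inflected forms such as "repositories," so it works best as a first-pass filter rather than a complete QA step.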

To prevent this, it is essential to build a control layer that dynamically injects a glossary during API calls and strictly specifies the tone in the system prompt.
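Because the API keeps no state between calls, the tone rule and the glossary have to travel with every request. A minimal sketch of such a control layer, assuming a chat-style API that accepts a list of `{"role", "content"}` messages (the exact request format, and whether and how you set temperature, depend on your provider); the glossary, tone rule, and function names are hypothetical, and no actual API call is made:

```python
import re

# Hypothetical glossary; in a real system this would be loaded from a database.
GLOSSARY = {
    "repository": "リポジトリ",
    "deploy": "デプロイ",
    "pull request": "プルリクエスト",
}

# Hypothetical tone rule, sent unchanged with every call.
TONE_RULE = "Use the polite です/ます style throughout; never mix in the plain だ/である style."


def relevant_terms(text, glossary):
    """Keep only glossary entries whose source term occurs in `text`."""
    return {
        term: target
        for term, target in glossary.items()
        if re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE)
    }


def build_messages(text, glossary):
    """Build a self-contained request: nothing is assumed to persist between calls."""
    terms = relevant_terms(text, glossary)
    glossary_lines = "\n".join(f"- {src} => {dst}" for src, dst in terms.items())
    system_prompt = (
        "Translate the user's English text into Japanese.\n"
        f"{TONE_RULE}\n"
        "Use these term translations exactly:\n"
        + (glossary_lines or "- (none)")
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": text},
    ]


messages = build_messages("Open a pull request before you deploy.", GLOSSARY)
print(messages[0]["content"])
```

Injecting only the terms that actually occur in the text keeps prompts short even when the glossary grows large. Combining this with a low temperature setting narrows output variation, though it does not fully remove it.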

3. Caught Between "Cost" and "Quality": The Challenge of Partial Updates

When translating frequently updated content such as websites or manuals, re-translating the entire text every time is inefficient.

- Cost issue: Even if only one line changes in a document of tens of thousands of characters, re-translating the whole text means paying for every token of the document again, so API costs grow quickly.

- Quality issue: Re-translating the whole text risks changing the wording of sections that were never modified.

Therefore, a mechanism to identify and translate only the updated sections (differential translation) is necessary, but this creates the next major challenge.
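One way to identify the updated sections is to key each stored translation by a hash of its source segment and send only segments with no matching key to the API. A minimal sketch, assuming the document has already been split into segments (for example, paragraphs); the function names are hypothetical and the stored translations are placeholders:

```python
import hashlib


def segment_key(text):
    """Stable key for a source segment: SHA-256 of the whitespace-normalized text."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def plan_update(new_segments, stored):
    """Split segments into reusable translations and indices that need the API.

    stored: dict mapping segment_key(source) -> existing translation
    """
    reuse, to_translate = {}, []
    for i, segment in enumerate(new_segments):
        key = segment_key(segment)
        if key in stored:
            reuse[i] = stored[key]
        else:
            to_translate.append(i)
    return reuse, to_translate


# Placeholder translations stand in for real stored output.
old = ["Install the tool.", "Run the setup script.", "Restart the server."]
stored = {segment_key(s): f"<ja:{s}>" for s in old}

new = ["Install the tool.", "Run the setup script with --force.", "Restart the server."]
reuse, to_translate = plan_update(new, stored)
print(to_translate)  # [1]
```

Because lookups are by content rather than position, a paragraph that merely moves within the document is still reused, and whitespace normalization keeps trivial reformatting from triggering a re-translation.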

4. Context Disconnection: The Pitfalls of Patchwork Translation

If you only send the updated sections to the AI, it cannot understand the "context before and after" those parts.

Example: The surrounding sentences are written in the plain style, yet the newly added sentence alone comes back in the polite style. Likewise, if the AI cannot determine what a pronoun (such as "it," "he," or "she") refers to, it may mistranslate it.

To prevent this kind of "patchwork" translation, the system must send the AI not only the updated segments to be translated but also the surrounding existing translations as context (background information).
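A sketch of such a context window, assuming segments and their existing translations are kept in aligned lists. The prompt wording, the `window` size, and the function name are illustrative assumptions. Including the neighboring source text alongside the existing translations is a design choice here; it gives the model what it needs to resolve a pronoun like "It":

```python
def build_context_prompt(segments, translations, index, window=2):
    """Prompt for translating segments[index] with its neighbors as reference.

    segments:     current source segments, in document order
    translations: existing translations aligned with `segments`
                  (None for segments that are new or have been edited)
    window:       number of neighboring segments to include on each side
    """
    start = max(0, index - window)
    end = min(len(segments), index + window + 1)
    lines = ["Reference only, do not translate:"]
    for j in range(start, end):
        if j == index or translations[j] is None:
            continue  # skip the target itself and neighbors with no translation yet
        lines.append(f"[{j}] SOURCE: {segments[j]}")
        lines.append(f"[{j}] EXISTING TRANSLATION: {translations[j]}")
    lines.append("")
    lines.append("Translate only the following segment, matching the style and terms above:")
    lines.append(segments[index])
    return "\n".join(lines)


segments = ["The server stores logs.", "It rotates them daily.", "Old logs are deleted."]
translations = ["サーバーはログを保存します。", None, "古いログは削除されます。"]
print(build_context_prompt(segments, translations, 1, window=1))
```

Because both neighbors are already translated in the polite style, the model has a concrete style to match, and it can see that "It" refers to the server.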

Approaches to Solving These Challenges

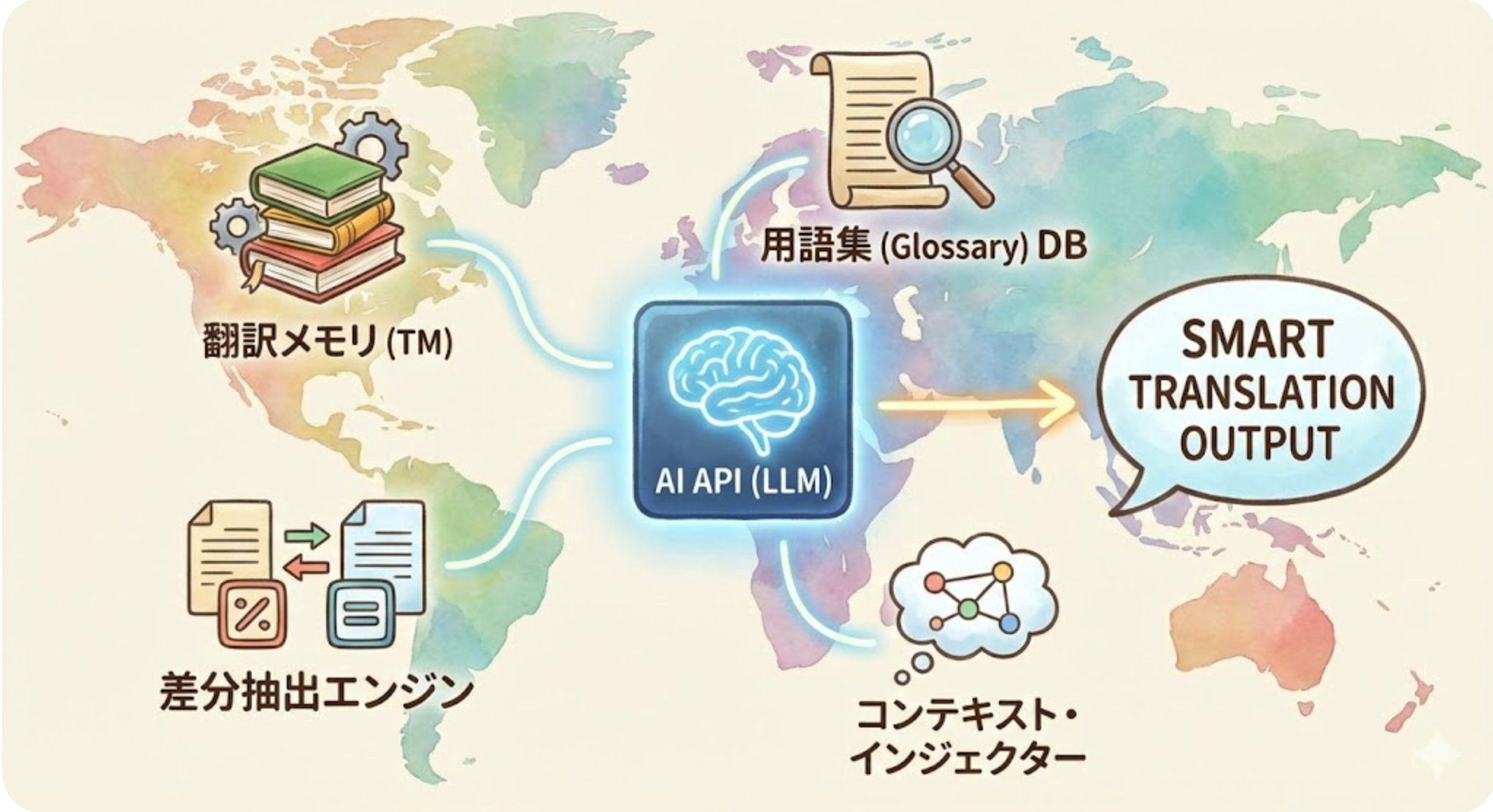

To address these issues, you need a "translation orchestration" approach built from techniques like the ones below, rather than bare API calls.

- Utilizing Translation Memory (TM): Store past translation results in a database, reuse them instead of re-translating identical sentences, and consult translations of similar sentences as references when needed.

- RAG (Retrieval-Augmented Generation) Approach: At translation time, retrieve the relevant glossary entries and past best practices and inject them into the prompt.

- Context injection: When performing differential translation, pass several lines before and after the changed section to the API as "reference information" to help keep the tone consistent.
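For the Translation Memory item, a small in-memory sketch can illustrate the difference between reusing identical sentences and consulting similar ones. It uses Python's standard `difflib.SequenceMatcher` for fuzzy matching; the class name, the 0.75 similarity threshold, and keeping entries in a dict instead of a database are assumptions made for illustration:

```python
from difflib import SequenceMatcher


class TranslationMemory:
    """Minimal in-memory translation memory (a stand-in for a database table)."""

    def __init__(self):
        self._entries = {}  # source sentence -> stored translation

    def add(self, source, translation):
        self._entries[source] = translation

    def lookup(self, source, threshold=0.75):
        """Return ("exact", translation), ("fuzzy", (matched_source, translation, score)),
        or ("miss", None)."""
        if source in self._entries:
            return "exact", self._entries[source]
        best = None
        for stored_source, translation in self._entries.items():
            score = SequenceMatcher(None, source, stored_source).ratio()
            if score >= threshold and (best is None or score > best[2]):
                best = (stored_source, translation, score)
        if best is not None:
            return "fuzzy", best
        return "miss", None


tm = TranslationMemory()
tm.add("Restart the server.", "サーバーを再起動します。")
print(tm.lookup("Restart the server."))  # ('exact', 'サーバーを再起動します。')
print(tm.lookup("Restart the web server.")[0])  # fuzzy
print(tm.lookup("Delete all cached files."))  # ('miss', None)
```

An exact hit can be reused without any API call, while a fuzzy hit is better passed to the model as a reference translation, since the sentences are not identical. The linear scan is fine for a demo; a TM with many entries would need an index.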

Summary

Incorporating AI translation into a system is not just about converting languages; it is an engineering challenge of managing consistency.

Rather than blindly assuming that "AI is perfect," it is important to understand how the API behaves and to build a system that maintains context and manages terminology. That is the key to achieving high-quality multilingual deployment.